Always sort your object lists

I tend to get pretty lazy with my coding sometimes. My TODOs occasionally require more typing than it would take to actually do the TODO (I sometimes get lazy with the thinking part). So why would I recommend* (*insist on) sorting every list of objects in your system? Two reasons.

- It makes testing a whole lot easier

- You're less likely to have an unsorted list of items appear in your GUI

I have only just started doing this with my code* (*I may post a more educated and experienced blog later which includes the phrase 'it depends' when talking about when to sort lists) and it seems to be paying off so far.

Writing Tests on Sorted Lists

In the past I would put together a small 'IsInList' method to test that an object was in a list. Or I would create a 'GetObjectInList' method to get an object and test it for expected values. The objects were stored in no particular order, which of course made this necessary. The unsorted nature of the lists also meant the search had to be sequential, which seemed amateurish (the performance implications were negligible since the lists were tiny, but...).

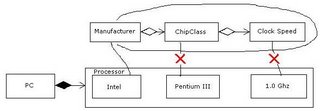

In any event, the nice thing about using a database is the ease of adding sorting. With a simple ORDER BY in MS Access I can sort on any column, ascending or descending. Very little code. The .NET Framework also provides some simple and powerful ways to sort lists. Sorting my lists makes testing easier since the position of each object is predictable. Testing is all about ensuring predictability anyway, right?
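
To make the "predictable location" point concrete, here is a minimal sketch of a check against a sorted list. The `Customer` class, the `CustomerLists.SortedByName` helper, and the sample names are all hypothetical, invented for illustration; they are not from my actual code, and the sketch assumes a reasonably modern C# compiler.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model class, used only for illustration.
public class Customer
{
    public string Name { get; }
    public Customer(string name) { Name = name; }
}

public static class CustomerLists
{
    // Returns a new list sorted by Name (ordinal comparison); the input is not modified.
    public static List<Customer> SortedByName(IEnumerable<Customer> customers)
    {
        var sorted = new List<Customer>(customers);
        sorted.Sort((a, b) => string.CompareOrdinal(a.Name, b.Name));
        return sorted;
    }
}

public static class SortedListChecks
{
    // Because the order is known, a test can check positions directly
    // instead of searching the list with an 'IsInList' style helper.
    public static bool FirstAndLastAreAsExpected()
    {
        var input = new[] { new Customer("Mills"), new Customer("Adams"), new Customer("Zane") };
        var sorted = CustomerLists.SortedByName(input);
        return sorted[0].Name == "Adams" && sorted[sorted.Count - 1].Name == "Zane";
    }
}
```

In a real test project the body of `FirstAndLastAreAsExpected` would probably become a couple of assertions in whatever test framework you use. The point is only that the index of each object is known up front.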

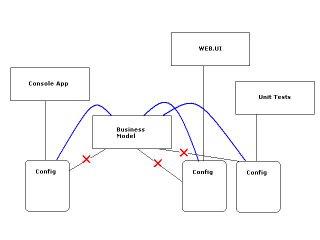

Your GUI lists should have default sort orders

If you have object lists and a GUI, you probably have list controls. I'll bet users are expecting data in those lists to be sorted either according to a scheme of their choosing, or in a commonly expected order. Ah, but if you use a layered design* (*like most kind-hearted developers) the question here becomes... where should sort logic exist? In the presentation layer or in the business model? I prefer a thin* (*super thin) presentation layer: the only code in the presentation layer should deal with form and page controls. I suggest, then, that sorting objects is the responsibility of the model and is a business rule. Sorting is part of the analysis of data, like calculations. Testing logic in the GUI is also more difficult, so move as much logic as possible into the model layer. If you are consciously sorting your object lists and your tests are checking this, there is very little chance of a nasty unsorted list control bug appearing in your GUI (this happened to me once, and was caught by a customer! Egad.)
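
As a rough sketch of that split, the model below owns the default sort order and the presentation code only converts items to display text. `Product`, `ProductCatalog`, and `ProductListView` are hypothetical names made up for this example, and the choice of a case-insensitive sort by name is an assumption, not a rule from any real system.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model class, used only for illustration.
public class Product
{
    public string Name { get; }
    public Product(string name) { Name = name; }
}

// Model layer: owns the default sort order as a business rule.
public class ProductCatalog
{
    private readonly List<Product> _products = new List<Product>();

    public void Add(Product product) { _products.Add(product); }

    // Default order (assumed here): by name, ignoring case.
    public IReadOnlyList<Product> GetProducts()
    {
        return _products
            .OrderBy(p => p.Name, StringComparer.OrdinalIgnoreCase)
            .ToList();
    }
}

// Presentation layer: no sort logic, it only turns items into display text.
public static class ProductListView
{
    public static List<string> ToDisplayLines(ProductCatalog catalog)
    {
        return catalog.GetProducts().Select(p => p.Name).ToList();
    }
}
```

Since the ordering lives in `ProductCatalog.GetProducts`, it can be tested without creating a single form or control, and every list control that reads from the catalog gets the same default order.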

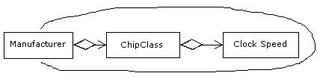

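The .NET Framework offers some powerful sorting tools, namely the IComparable interface and comparer objects (classes that implement IComparer). IComparable gives a class its default sort order, and a comparer supplies any alternative order you need. Together they cover just about any sorting requirement, easily and elegantly.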
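
Here is a minimal sketch of the two tools side by side, using the generic `IComparable<T>` and `IComparer<T>` interfaces. The `Employee` class, the `EmployeeAgeComparer`, and the choice of name as the default order are hypothetical, picked only to illustrate the idea.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model class whose default (natural) order is by Name.
public class Employee : IComparable<Employee>
{
    public string Name { get; }
    public int Age { get; }
    public Employee(string name, int age) { Name = name; Age = age; }

    public int CompareTo(Employee other)
    {
        if (other == null) return 1; // by convention, any instance sorts after null
        return string.CompareOrdinal(Name, other.Name);
    }
}

// A separate comparer object supplies an alternative order: by Age.
public class EmployeeAgeComparer : IComparer<Employee>
{
    public int Compare(Employee x, Employee y)
    {
        if (ReferenceEquals(x, y)) return 0;
        if (x == null) return -1;
        if (y == null) return 1;
        return x.Age.CompareTo(y.Age);
    }
}

public static class EmployeeSorting
{
    // Sorts a copy using the class's own CompareTo.
    public static List<Employee> ByName(IEnumerable<Employee> employees)
    {
        var list = new List<Employee>(employees);
        list.Sort();
        return list;
    }

    // Sorts a copy using the comparer object instead.
    public static List<Employee> ByAge(IEnumerable<Employee> employees)
    {
        var list = new List<Employee>(employees);
        list.Sort(new EmployeeAgeComparer());
        return list;
    }
}
```

One caveat worth knowing: `List<T>.Sort` is not a stable sort, so objects that compare as equal may not keep their original relative order. If that matters, include a tie-breaker in the comparison.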